Microsoft Copilot real-life scenarios – How to prevent oversharing?


With the introduction of Microsoft Copilot, conversations about AI in the workplace have shifted from theoretical to practical as businesses worldwide begin integrating this cutting-edge technology into their operations.

As the co-founder and president of Syskit and a Microsoft MVP for SharePoint and M365, I have been closely involved in deploying and evaluating Microsoft 365 Copilot from day one, over the past six months. This blog post summarizes the most important lessons from that experience, offering insights and guidance for anyone struggling with their own AI journey with Microsoft Copilot.

I’ve also held sessions on this topic at conferences around the world. The sessions were packed, which shows how palpable the interest in AI is in the offline world, too.

The initial promise of Microsoft Copilot

Microsoft Copilot promised a revolution in workplace productivity, leveraging AI to enhance decision-making, automate routine tasks, and foster collaboration. I was intrigued by its potential to transform how we interact with data and manage our workflows. Today’s world is full of different data sources, and Copilot will allow us to consume this data faster and focus on what really matters to us.

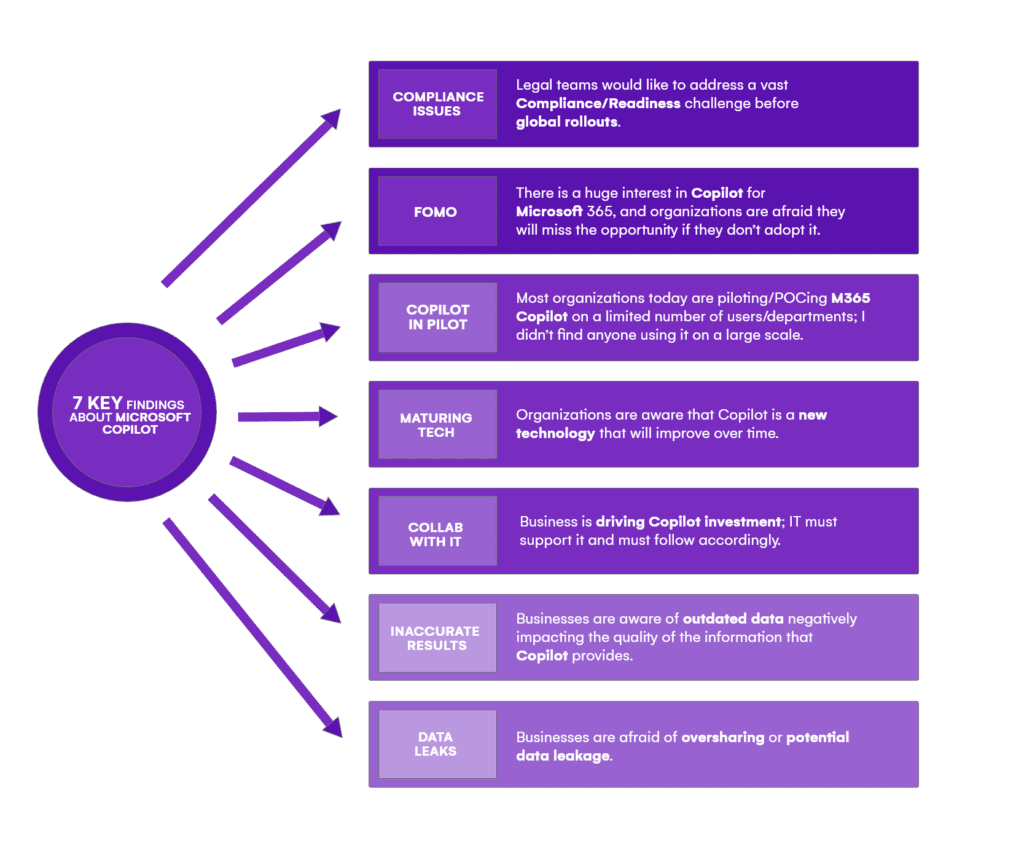

Key findings from discussing Copilot challenges with companies

Copilot compliance and readiness: The unseen challenges

Compliance is one of the most significant hurdles to the widespread adoption of Microsoft Copilot. Businesses are acutely aware of the risks associated with AI, particularly around data privacy and security. Legal teams are at the forefront of this challenge, working diligently to ensure that Copilot’s deployment aligns with regulatory requirements and company policies.

Copilot deployment challenges: Governance and user experience

The deployment of Microsoft Copilot has not been without its challenges. Governance and user experience have emerged as two critical areas requiring attention. The lack of robust governance frameworks and an inconsistent user experience across Microsoft 365 apps (for example, you can generate pictures only in PowerPoint, and you cannot reference a Word file; but why?!) have been significant obstacles to seamless integration.

The risk of AI inaccuracies

AI inaccuracies, or “AI hallucinations,” pose a real risk to businesses. Microsoft Copilot, while impressive, is not immune to these errors. In my experience, Copilot can occasionally produce incorrect or misleading outputs, which could have serious implications for business decisions and operations.

For example, what happens when web access is disabled and you want to find out the dates of an event that is important for your work? Because company files contain little information about external events, Copilot cannot answer such questions.

The learning curve and user interaction with Microsoft Copilot

Adopting Microsoft 365 Copilot involves a steep learning curve. Users must learn to interact with the system effectively, crafting queries that elicit the most accurate and useful responses. This learning process is ongoing as Copilot continues to evolve and improve.

- Using Copilot and promoting it internally comes with a steep learning curve.

- We need to learn how to talk to generative AI such as Copilot, because it does not come naturally to most people.

- Give Copilot a perspective to take full advantage of its capabilities, for example: “Pretend you are a PR/media analyst.” When prompting, be specific about what you are asking for and what you expect. Specify the format, set the tone, and double-check the output for accuracy.

- Without this, Copilot produces undesirable outputs that users disregard, leaving them unsatisfied. Especially at the beginning, organizations using Copilot may not see the benefits immediately.
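The prompting advice in this list (give Copilot a perspective, say what you are asking for and what you expect, specify format and tone) can be captured in a reusable template. The following Python is a minimal sketch: `PromptSpec` and `build_prompt` are hypothetical helpers of our own, not part of any Copilot API, and the example values are purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical structure for a Copilot prompt; field names are illustrative."""
    role: str           # the perspective Copilot should take
    task: str           # what you are asking for
    expected: str       # what a good answer should contain
    output_format: str  # e.g. "a table", "five bullet points"
    tone: str           # e.g. "neutral", "formal"


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the parts into one prompt string, one instruction per line."""
    parts = [
        f"Pretend you are {spec.role}.",
        f"Task: {spec.task}",
        f"Expected result: {spec.expected}",
        f"Format: {spec.output_format}",
        f"Tone: {spec.tone}",
    ]
    return "\n".join(parts)


if __name__ == "__main__":
    # Illustrative values only.
    spec = PromptSpec(
        role="a PR/media analyst",
        task="Summarize last month's press coverage of our product launch.",
        expected="The three most common themes, each with one supporting quote.",
        output_format="A bulleted list",
        tone="Neutral and concise",
    )
    print(build_prompt(spec))
```

A template only makes prompts more consistent; checking the answer for accuracy is still a manual step.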

My recommendations for Microsoft Copilot deployment

Drawing on our experience, we have developed a set of recommendations for organizations considering the deployment of Microsoft 365 Copilot:

- Educate stakeholders on Copilot’s risks; don’t roll it out ASAP just because they want it.

- Make sure your M365 tenant has appropriate permissions in place.

- Make sure governance controls are in place. This will help avoid oversharing and/or leakage of sensitive information.

- Don’t blindly trust Copilot. It is smart, but it produces so-called hallucinations. It gets better over time because Microsoft is constantly upgrading it behind the scenes.

- The better you describe what you want in the answer, the better the output. Be precise and clear in your questions.

My 6 recommendations for preventing oversharing in M365

- Check public M365 groups and Teams.

- Find the “Everyone” and “Everyone except external users” groups on your SharePoint sites and remove them.

- Review sharing settings and disable “Anyone” sharing links.

- Check M365 Group and Teams sites for shadow users (users who are not members of the group but have access to the underlying SharePoint site).

- Set the default sharing link type to “Specific people” rather than company-wide (a company-wide link is effectively the same as granting “Everyone except external users” access on the SharePoint site).

- Use Purview to apply sensitivity labels. Sensitivity labels can apply encryption and restrict access to the labeled content, adding an extra layer of security.

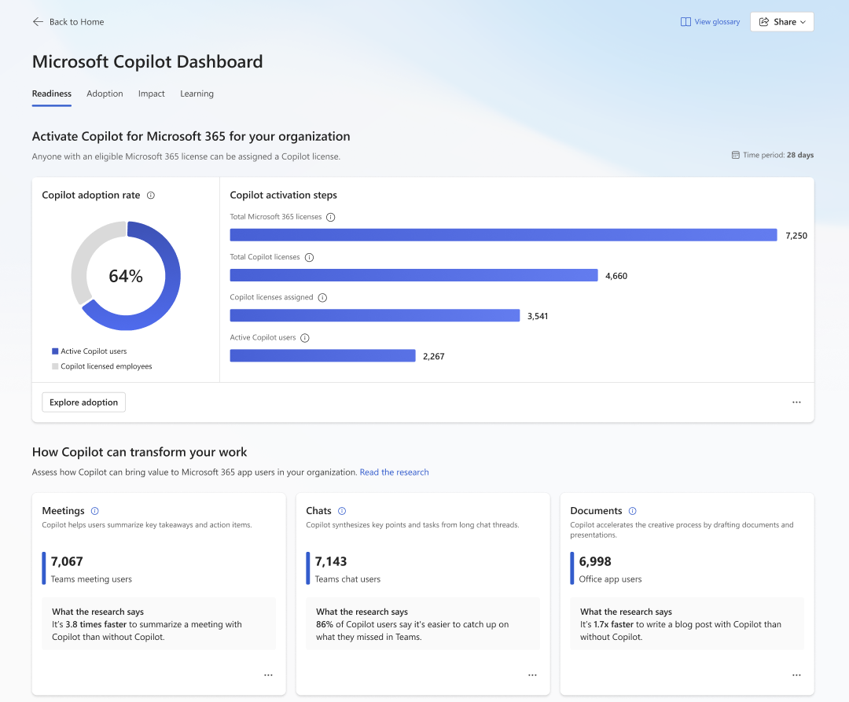
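To make the checklist concrete, here is a minimal Python sketch that runs the checks against an inventory of sites you have already collected. It is an illustration under stated assumptions: `SiteRecord`, `find_oversharing`, and every field name are hypothetical, and gathering the data (admin center exports, Graph, a third-party tool) is out of scope. Shadow users fall out as a set difference: users with site access minus group members.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set

# Broad principals the checklist says to remove from SharePoint sites.
BROAD_PRINCIPALS = {"Everyone", "Everyone except external users"}


@dataclass
class SiteRecord:
    """Hypothetical inventory entry for one group-connected site."""
    name: str
    visibility: str          # "Public" or "Private" (assumed values)
    group_members: Set[str]  # members of the M365 group / team
    site_users: Set[str]     # all users with access to the SharePoint site
    principals: Set[str] = field(default_factory=set)  # groups granted access
    anyone_links_enabled: bool = False
    default_link_type: str = "SpecificPeople"  # or "Organization" (assumed)
    sensitivity_label: Optional[str] = None


def find_oversharing(site: SiteRecord) -> List[str]:
    """Return human-readable findings, one per failed check."""
    findings = []
    if site.visibility == "Public":
        findings.append("public group/team")
    for principal in sorted(site.principals & BROAD_PRINCIPALS):
        findings.append(f"broad principal: {principal}")
    if site.anyone_links_enabled:
        findings.append("'Anyone' links allowed")
    # Shadow users: access to the site without being in the group.
    for user in sorted(site.site_users - site.group_members):
        findings.append(f"shadow user: {user}")
    if site.default_link_type != "SpecificPeople":
        findings.append(f"default link type is {site.default_link_type}")
    if site.sensitivity_label is None:
        findings.append("no sensitivity label")
    return findings


if __name__ == "__main__":
    # Made-up example site.
    site = SiteRecord(
        name="Finance",
        visibility="Public",
        group_members={"ana", "ben"},
        site_users={"ana", "ben", "carl"},
        principals={"Everyone except external users"},
        default_link_type="Organization",
    )
    for finding in find_oversharing(site):
        print(finding)
```

For the example site, this reports the public visibility, the broad principal, “carl” as a shadow user, the company-wide default link, and the missing label.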

What can you do today for your users, and how can you check Copilot adoption?

1. Microsoft Copilot for Microsoft 365 can access web content

As an IT admin, leave the “Microsoft Copilot for Microsoft 365 can access web content” setting on. Otherwise, if the information is not on your SharePoint, Copilot might state that some things are not true because it cannot check online for the latest info.

- The end user also needs to enable this in Copilot. It happened to me: I missed this toggle!

- With this enabled, replies are better, especially for questions about public facts.

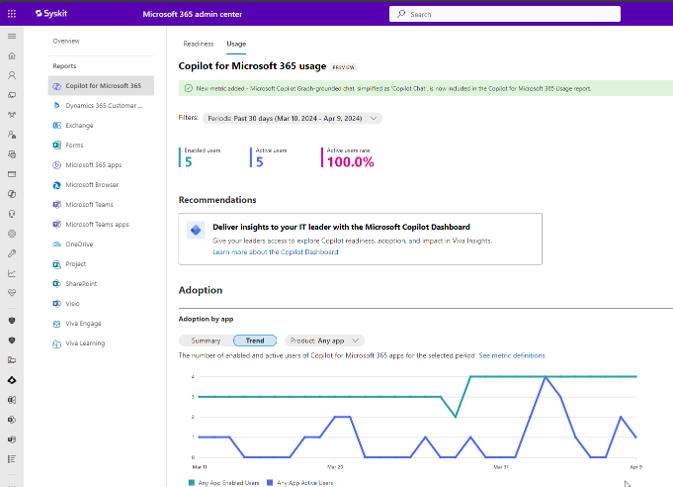

2. Reporting Tools – M365 admin

a) Microsoft 365 Admin Center Copilot Usage Reports

Provides readiness and usage reports and shows Copilot-enabled M365 users vs. active users over a 7-, 30-, 90-, or 180-day period.

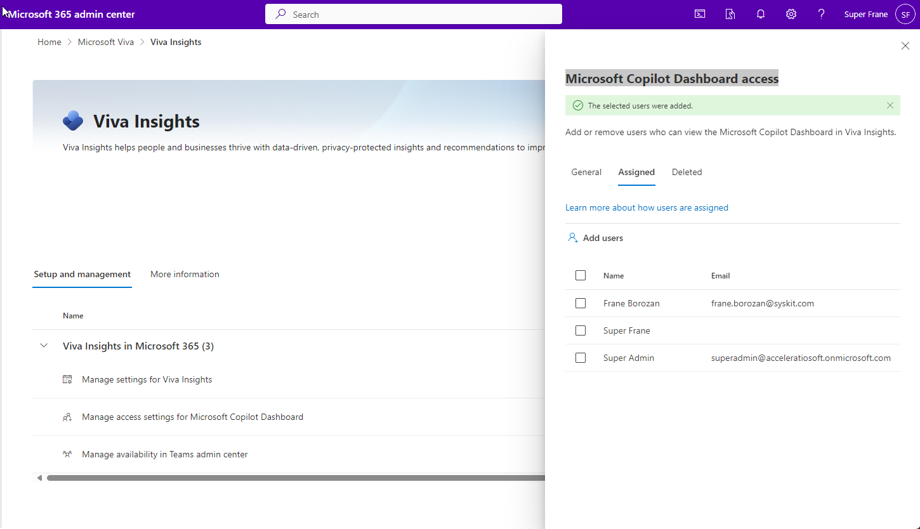
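The report’s core comparison, enabled vs. active users, boils down to a simple percentage per period. Here is a minimal Python sketch, assuming you copy the enabled and active counts for each period out of the report yourself. `active_percent` and `summarize` are hypothetical helpers, and the numbers are made up.

```python
from typing import Dict, Tuple


def active_percent(enabled: int, active: int) -> float:
    """Percentage of Copilot-enabled users who were active, to one decimal."""
    if enabled <= 0:
        raise ValueError("enabled must be positive")
    if not 0 <= active <= enabled:
        raise ValueError("active must be between 0 and enabled")
    return round(100 * active / enabled, 1)


def summarize(periods: Dict[int, Tuple[int, int]]) -> Dict[int, float]:
    """Map period length in days to the active percentage for that period.

    `periods` maps days -> (enabled_users, active_users), e.g. as read off
    the report's 7-, 30-, 90- and 180-day views.
    """
    return {days: active_percent(e, a) for days, (e, a) in periods.items()}


if __name__ == "__main__":
    # Illustrative numbers only, not real report data.
    print(summarize({7: (200, 48), 30: (200, 95), 90: (200, 130)}))
    # {7: 24.0, 30: 47.5, 90: 65.0}
```

Tracking this percentage over time is one simple way to see whether your training and prompting guidance is actually turning licenses into usage.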

b) Viva Insights – Microsoft Copilot Dashboard access

First, go to admin > settings > Viva > Viva Insights > Setup and management > manage access settings for Microsoft Copilot Dashboard, and add the appropriate users there.

Provides leaders and M365 admins with additional visibility into M365 Copilot adoption:

- By app: Teams, Outlook, and Word

- By feature (such as “summarize in a Team”)

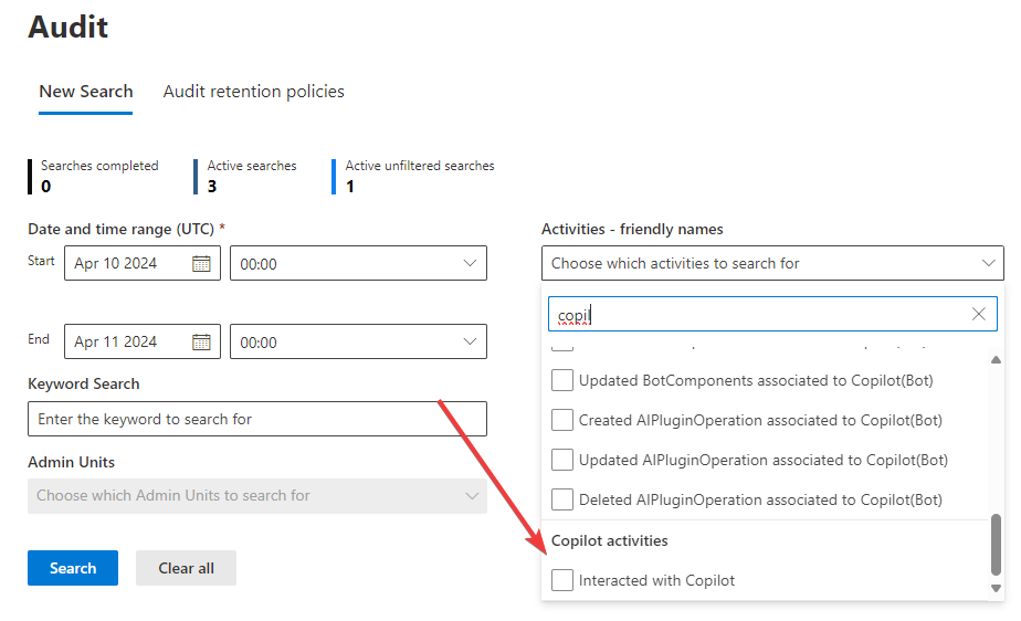

c) Purview Audit Log

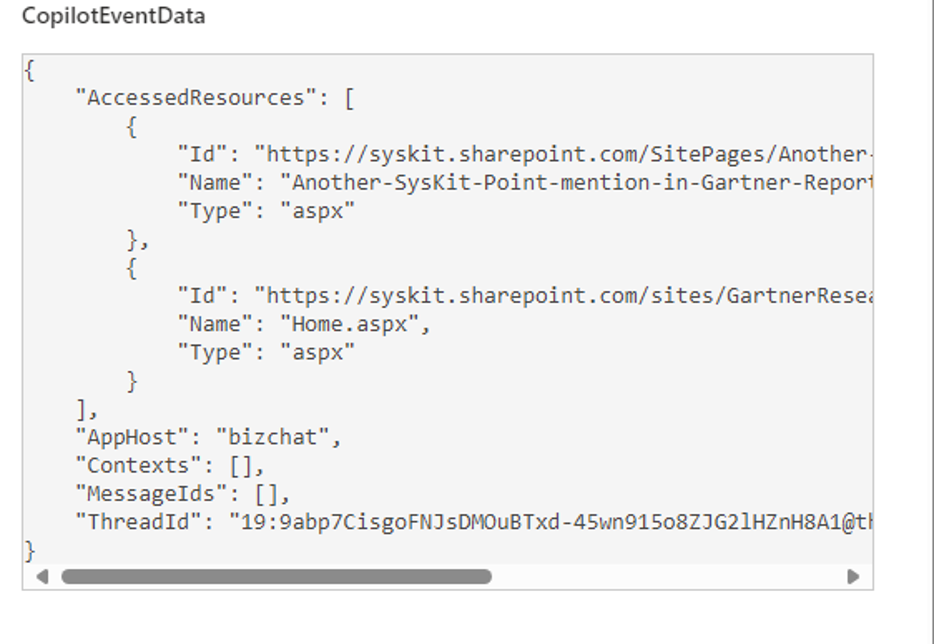

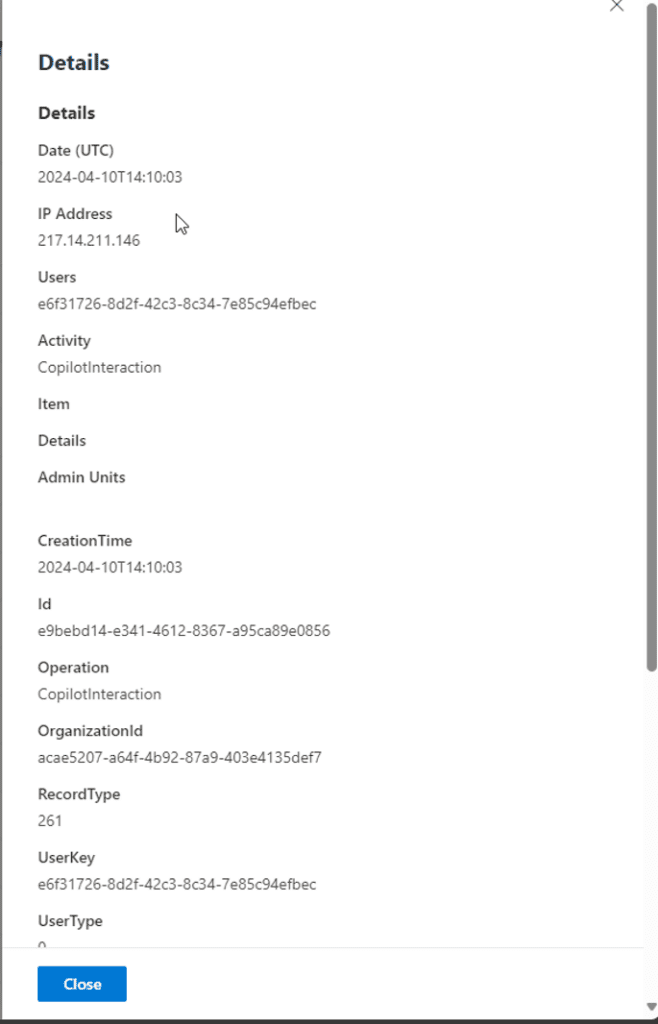

The audit log includes Copilot activity, where you can track the “interacted with Copilot” event for all users.

Event details include information such as the time and the app from which Copilot was accessed (Teams, Word, etc.).

It (still!) doesn’t tell you the prompt the user entered.

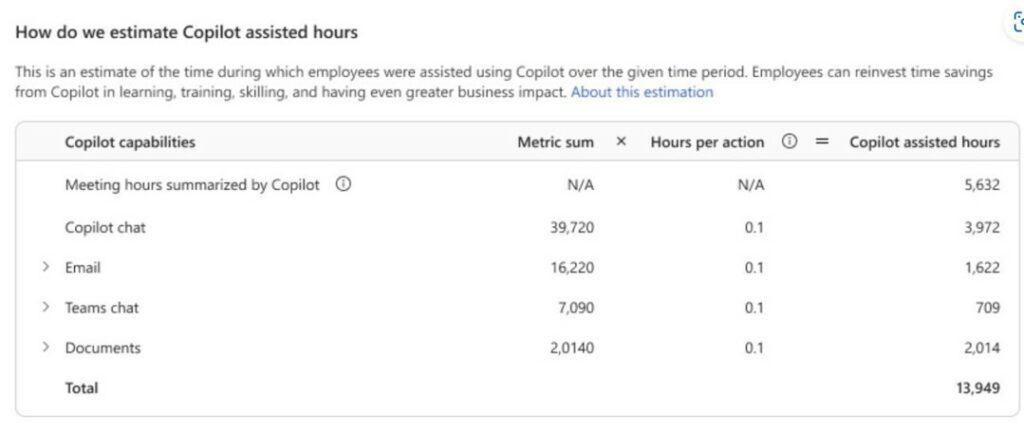
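If you export these audit events for offline analysis, a few lines of Python can tally interactions per app and per user. This is a sketch under loud assumptions: the flat record shape (`activity`, `user`, `app` keys) is our own simplification, not the Purview export schema, so you would first need to map the real fields onto it. As noted above, there is no prompt text to analyze.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical, simplified audit record. A real Purview export has its own
# schema; map its fields onto these keys before using this sketch.
Record = Dict[str, str]


def copilot_usage(records: Iterable[Record]) -> Tuple[Counter, Counter]:
    """Count Copilot interactions per app and per user.

    Only records whose 'activity' is 'interacted with Copilot' are counted.
    There is no prompt field to aggregate, because the log does not include it.
    """
    by_app: Counter = Counter()
    by_user: Counter = Counter()
    for rec in records:
        if rec.get("activity") != "interacted with Copilot":
            continue
        by_app[rec.get("app", "unknown")] += 1
        by_user[rec.get("user", "unknown")] += 1
    return by_app, by_user


if __name__ == "__main__":
    # Made-up sample records for illustration.
    sample = [
        {"activity": "interacted with Copilot", "user": "ana", "app": "Teams"},
        {"activity": "interacted with Copilot", "user": "ana", "app": "Word"},
        {"activity": "interacted with Copilot", "user": "ben", "app": "Teams"},
        {"activity": "file accessed", "user": "ben", "app": "SharePoint"},
    ]
    by_app, by_user = copilot_usage(sample)
    print(dict(by_app))   # {'Teams': 2, 'Word': 1}
    print(dict(by_user))  # {'ana': 2, 'ben': 1}
```

Counting per app gives you roughly the same breakdown as the Viva Insights dashboard, for tenants or periods where you only have the audit log.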

d) Copilot’s Return on Investment (ROI) – coming soon

Is Microsoft Copilot worth it?

As we look to the future, it is clear that Microsoft Copilot will play a significant role in shaping the digital workplace. The insights we have gained over the past six months are just the beginning of a much longer journey. Microsoft 365 Copilot has the potential to redefine productivity and collaboration, but it requires a strategic and thoughtful approach to fully realize its benefits.

Take the Copilot Readiness assessment

At Syskit, we developed the Copilot Readiness dashboard, which will provide you with complete visibility into potential security risks associated with the technology. So, if you’re starting to prepare your environment for implementing Microsoft Copilot, make sure to try out our free trial and see if you’re prepared for the deployment.